by Corry Shores


Nerves transmit information electrically. (Rose 72b) When a signal passed down a nerve it did so as a wave of electricity, a pulse which passed from the cell body to the end of the nerve fibre. This pulse is the ACTION POTENTIAL. In today's terms, the language of the brain in which the neurons transmit information is electric. (72c)

whatever the magnitude of the initial excitation, provided it is above a certain THRESHOLD size, the size of the action potential does not alter. The shape and size of the wave [seen below] would be identical whatever the magnitude of the stimulus that caused it. This phenomenon of constancy of wave form and size is known as the ALL-OR-NONE LAW of axon transmission. (Rose 77b)

The axon carries one type of signal, and one only, faithfully and at some speed, from one end to the other. It cannot even have the luxury of the variety of a morse-code operator, who can at least vary dots and dashes. The axon's language is entirely one of dots. (Rose 77b)

How then can more than the crudest sort of yes/no information be transferred along the axon? The one aspect of the axonal signal which is modifiable, despite these constraints, is the FREQUENCY of the response. The axon could transmit no action potential, or one a minute, or one a second, or a hundred a second, or a fluctuating number, all depending on the nature of the incoming message. It is the frequency of signal which functions as the basis of the axonal information transfer system. (77bc, emphasis mine)

Since the discharge of each new segment of the axon is always complete, the intensity of the transmitted signal does not decay as it propagates along the nerve fibre. One might be tempted to conclude that signal transmission in the nervous system is of a digital nature: a neuron is either fully active, or it is inactive. However, this conclusion would be wrong, because the intensity of a nervous signal is coded in the frequency of succession of the invariant pulses of activity, which can range from about 1 to 100 per second. The interval between two electrical spikes can take any value (longer than the regeneration period), and the combination of analog and digital signal processing is utilized to obtain optimal quality, security, and simplicity of data transmission. (Müller et al. 5b)
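The frequency code that Rose and Müller et al. describe can be sketched in a few lines of Python. This is a minimal illustration under simplifying assumptions, not a model from either source: every spike is an identical all-or-none pulse, spikes are evenly spaced, and the hypothetical helpers `intensity_to_rate`, `spike_times` and `decode_intensity` map a stimulus intensity between 0 and 1 linearly onto the range of about 1 to 100 pulses per second quoted above.

```python
MIN_RATE_HZ, MAX_RATE_HZ = 1.0, 100.0  # range quoted by Müller et al.


def intensity_to_rate(intensity):
    """Map a stimulus intensity in [0, 1] onto a firing rate in Hz (linear mapping assumed)."""
    intensity = min(max(intensity, 0.0), 1.0)
    return MIN_RATE_HZ + intensity * (MAX_RATE_HZ - MIN_RATE_HZ)


def spike_times(rate_hz, duration_s):
    """Evenly spaced spike times. Every spike is the same all-or-none pulse,
    so only the timing (how many per second) carries information."""
    interval = 1.0 / rate_hz
    return [i * interval for i in range(int(duration_s * rate_hz))]


def decode_intensity(times, duration_s):
    """Recover the intensity from the spike count alone; no amplitude is needed."""
    rate = len(times) / duration_s
    return (rate - MIN_RATE_HZ) / (MAX_RATE_HZ - MIN_RATE_HZ)


weak = spike_times(intensity_to_rate(0.1), 2.0)
strong = spike_times(intensity_to_rate(0.9), 2.0)
print(len(weak), len(strong))
```

Both trains are made of identical "dots"; the only thing that distinguishes a weak stimulus from a strong one is how many dots arrive per second.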

voltage fluctuations associated with dendrosomatic synaptic activity propagate significant distances along the axon, and that modest changes in the somatic membrane potential of the presynaptic neuron modulate the amplitude and duration of axonal action potentials and, through a Ca2+-dependent mechanism, the average amplitude of the postsynaptic potential evoked by these spikes. These results indicate that synaptic activity in the dendrite and soma controls not only the pattern of action potentials generated, but also the amplitude of the synaptic potentials that these action potentials initiate in local cortical circuits, resulting in synaptic transmission that is a mixture of triggered and graded (analogue) signals. (McCormick et al., abstract, emphasis mine)

Messages arrive at the synapses from the axon in the form of action potentials. The variations permitted to the action potentials are neither in magnitude nor form, but only frequencies. Not much plasticity about the axon; it either does or doesn't fire. It is to the synapses that we must look to begin to introduce the element of variety which must be the key to the nervous system, which must distinguish it from an inevitable system in which, for a given input, there is an invariant output response. For it is at the synapses that it will be decided whether or not the next cell will fire in its turn. The synapse is a type of valve or gate. If the post-synaptic cell fired invariantly whenever an action potential arrived down the axon from the propagating cell, there would be no point in having a synapse. The axon might as well continue unbroken to the end of the axon of the second cell. No modification could ever occur. The axon has no choice but to fire. At the synapses between cells lies the choice point which converts the nervous system from a certain, predictable and dull one into an uncertain, probabilistic and hence interesting system. Consciousness, learning and intelligence are all synapse-dependent. It is not too strong to say that the evolution of humanity followed the evolution of the synapse. (Rose, 78-79, emphasis mine)

the axonal signals arriving at the synapse in the form of action potentials of particular frequencies are translated by the synapse into signals for the triggering of a chemical mechanism. The release of particular chemical substances which diffuse across the gap between the pre- and post-synaptic membranes and interact at the post-synaptic side with particular receptors, thereby triggers a sequence of post-synaptic events which may result in due course in the firing of the post-synaptic neuron. (81bc)

The post-synaptic membrane is not indiscriminately flooded with transmitter in response to an axonal signal. Instead, it is being continually bombarded with packages, each containing a prescribed amount of transmitter. (Rose 81a)

Once released into the gap, the transmitter diffuses across to the post-synaptic side. The time taken to do so, the delay in transmission, is in neuronal terms quite substantial. From 0.3 to 1.0 milliseconds may be involved. The cleft, although almost unimaginably narrow by nonmicroscopic standards, still represents a journey many times the length of a transmitter molecule. While on this journey, the transmitter is vulnerable, as we shall see later, to chemical attack. (Rose 85b)

A change in the membrane structure results in a change in permeability to the entry and exit of ions, and hence produces a change of polarization. (Rose 85c)

In the case of an excitatory transmitter the change in polarization is a decrease of the resting potential towards zero – a depolarization. If the depolarization goes beyond a certain threshold, as will be clear from what was said earlier about the propagation of an action potential, a wave of depolarization will be triggered; the post-synaptic neuron will fire. It has, however, to reach that threshold. How can this be achieved? The action potential is an all-or-none phenomenon. The axon either transmits or it does not, and if it does not, the initial stimulus merely dies away. At the post-synaptic membrane though, this need not be the case. The arrival of a small amount of transmitter – a quantum say – will cause a small depolarization, not enough to trigger an action potential which will spread, but enough to be measured as a miniature EXCITATORY POST-SYNAPTIC POTENTIAL (EPSP). If more transmitter arrives, before the first EPSP has had time to decline, either a short distance away in space at an adjacent synapse, or a short period away in time at the same synapse, the effect of the second quantum of transmitter will be added to that of the first; SUMMATION occurs. If enough quanta of transmitter arrive within a circumscribed area or time the summation will build up until the threshold for the action potential is reached. The trigger will be pulled; the cell will fire an impulse down its own axon. The receptors allow the post-synaptic neuron to compute the strength of the signal arriving at it; if it is merely the continuous random release of a few quanta of transmitter, the EPSPs will rarely be of sufficient magnitude for the cell to fire. If the quanta of transmitter arrive fast enough, firing will occur. (Rose 85-86b, emphasis mine)
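Rose's account of summation can be illustrated with a toy calculation. The sketch below is a simplification resting on assumptions, not Rose's own model: each quantum adds a fixed EPSP that then decays exponentially, the membrane potential is the sum of these decaying EPSPs, and the cell fires if that sum ever reaches threshold. The resting potential, threshold, EPSP size and decay time constant are illustrative values chosen only for the example.

```python
import math

# Illustrative values (assumptions, not taken from Rose)
RESTING_MV = -70.0
THRESHOLD_MV = -55.0
QUANTUM_EPSP_MV = 2.0  # depolarization produced by one quantum of transmitter
TAU_MS = 10.0          # time constant with which each EPSP dies away


def peak_depolarization(arrival_times_ms):
    """Largest summed depolarization produced by quanta arriving at the given times.

    Between arrivals the summed potential only decays, so its maximum
    always occurs at one of the arrival times."""
    peak = 0.0
    for t in arrival_times_ms:
        total = sum(QUANTUM_EPSP_MV * math.exp(-(t - s) / TAU_MS)
                    for s in arrival_times_ms if s <= t)
        peak = max(peak, total)
    return peak


def fires(arrival_times_ms):
    """True if summation ever carries the membrane over threshold."""
    return RESTING_MV + peak_depolarization(arrival_times_ms) >= THRESHOLD_MV


fast = [float(k) for k in range(20)]  # 20 quanta, 1 ms apart (temporal summation)
slow = [50.0 * k for k in range(20)]  # the same 20 quanta, 50 ms apart
simultaneous = [0.0] * 8              # 8 quanta at once at adjacent synapses (spatial summation)
print(fires(fast), fires(slow), fires(simultaneous))
```

With these numbers the call prints `True False True`: the same twenty quanta fire the cell when they arrive fast enough to pile up, but not when each EPSP has died away before the next one arrives.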

Synapses can say No as well as Yes. They can inhibit as well as excite the post-synaptic neuron. (Rose 88c) Just as the excitatory transmitter exerts its effect by causing a membrane depolarization, an excitatory post-synaptic potential, so the inhibitory transmitter functions by increasing the polarization (negativity) of the post-synaptic cell. This is called HYPERPOLARIZATION, and is measured as a miniature INHIBITORY POST-SYNAPTIC POTENTIAL (IPSP). (88-89)

On the myriad branching dendrites and the cell body of a single neuron there may be up to ten thousand synapses. Each of these synapses is passing information to the post-synaptic neuron, some by signaling with excitatory post-synaptic potentials, some with inhibitory post-synaptic potentials; for some the signal is one of silence, the absence of an IPSP for instance. Each incoming EPSP or IPSP will carry its message to the post-synaptic cell in a wave of depolarization or hyperpolarization. How far the wave will spread down the dendrite and the membrane of the cell body will depend upon the sum of all the incoming signals at that given time. (88-89)

Messages flow from the dendrites and cell body towards the axon like water from the tributaries of a river. Although the axon in isolation can conduct in either direction, in real life it is a one-way system; the action potential begins at a point where in a typical neuron the cell body narrows down towards the axon. Whether the axon fires or not depends on the sum of all the events arriving at the point at a given time. To these events, computed as a result of signals which may be arriving at far distant dendrites, the axon will respond by firing only if the depolarization climbs over the threshold. (Rose 90a, emphasis mine)

This is a certain, deterministic response: the uncertainty of the system arises from the computation of the events within and along the dendrites and cell body; the certainty of the arrival of particular EPSPs and IPSPs from some synapses, and the uncertainty of the chance bombardment by spontaneous transmitter release from others. Nor has each synapse or each cell equal weight in such interactions. Detailed analysis of some synaptic interactions shows that in the arrangement of synapses in some parts of the brain, the axon from one cell lies closely intertwined along the dendrite of a second cell, making not just one, but thousands of synaptic connections with it along its length. The two, axon and dendrite, twist around each other like bindweed around brambles, with synapses at each of the thorns of the bramble - the dendritic spines. Signals from such an axon should 'matter more' to the recipient dendrite than those from an axon which makes but a few contacts. (Rose 89-90c, emphasis mine)
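The weighting Rose describes, where an axon making thousands of contacts should 'matter more' than one making a few, can be sketched as a signed, weighted sum followed by a deterministic threshold. This is a hypothetical illustration: the `(sign, contacts, psp_mv)` tuples, the per-contact potentials and the 15 mV threshold gap are invented for the example, and the sketch ignores how potentials fade as they spread along real dendrites.

```python
THRESHOLD_GAP_MV = 15.0  # depolarization above rest needed at the axon (illustrative)


def net_depolarization(inputs):
    """Sum EPSPs (sign +1) and IPSPs (sign -1) from every presynaptic axon.

    Each input is (sign, number_of_contacts, potential_per_contact_mv), so an
    axon that twists along the dendrite making many contacts weighs more."""
    return sum(sign * contacts * psp_mv for sign, contacts, psp_mv in inputs)


def axon_fires(inputs):
    """The summation itself is deterministic: fire only if the sum clears threshold."""
    return net_depolarization(inputs) >= THRESHOLD_GAP_MV


sparse_excitatory = [(+1, 2, 0.5)] * 5  # five axons with two contacts each
inhibitory = [(-1, 10, 0.5)]            # one inhibitory axon
bindweed = [(+1, 1000, 0.02)]           # one axon with a thousand weak contacts

print(axon_fires(sparse_excitatory + inhibitory))
print(axon_fires(sparse_excitatory + inhibitory + bindweed))
```

Here the five sparse excitatory inputs are exactly cancelled by the inhibitory one, and only the single "bindweed" axon, through the sheer number of its contacts, tips the sum over threshold.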

The axon → synapse → dendrite → cell body arrangement of neurons allows pathways of connected cells to be assembled. Thus if several neurons are connected synaptically, one with the other, they make a linear pathway. (Rose 96c, some emphasis mine)

Finally the pathway, or part of it, can bend backwards on itself to form a net. Nets can be made with three, or even two neurons. (Rose 97b)

If we assume just four neurons, each making but one synapse, the number of possible nets available is eleven. If it is recalled that each synapse can either be inhibitory or excitatory, then the number of interactions of even this simple network becomes quite large. If we were to include even a fraction of the real number of synapses which may be involved it is clear that the number of interactions between four neurons becomes so large as to be almost impossible to compute. Obviously, as the number of cells increases, so does the complexity of the system. (Rose 97-99)
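Rose's point about combinatorial growth can be made concrete with a simple count. The counting rule here is an assumption of this sketch and is deliberately different from Rose's (he assumes each neuron makes only one synapse and reports eleven possible nets for four neurons): every ordered pair of distinct neurons is taken to be either unconnected, joined by an excitatory synapse, or joined by an inhibitory one, which gives 3^(n(n-1)) possible wirings. The brute-force enumeration checks that closed form for a small network.

```python
from itertools import permutations, product


def wiring_count(n):
    """Closed form: 3 options (none, excitatory, inhibitory) for each of the
    n * (n - 1) ordered pairs of distinct neurons."""
    return 3 ** (n * (n - 1))


def wiring_count_brute(n):
    """Enumerate every assignment explicitly; feasible only for tiny n."""
    pairs = list(permutations(range(n), 2))
    return sum(1 for _ in product((None, "excitatory", "inhibitory"), repeat=len(pairs)))


for n in range(2, 7):
    print(n, wiring_count(n))
```

Even under this crude rule, four neurons already allow 531,441 wirings and six allow more than 2 × 10^14, before the strength or number of individual synapses is taken into account.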

Pathways go from somewhere to somewhere else, serving ... to gather information, in the form of an AFFERENT (incoming) signal, from a sense receptor and to transmit it as an EFFERENT (outgoing) signal by a nerve which is connected to a muscle or gland, so that the effector organ can be instructed as to how to do its job – to contract more or less, or to secrete more or less. (Rose 99b)

a system for refining and modifying those instructions. These INTERNEURONS (those connected only to each other and not to sense organs or effectors) are of value because of what they add to the information on which the instruction is based, by virtue of their capacity to compare it with past information, and draw conclusions. (Rose 99c)

a fleshy, creeping underground stem by means of which certain plants propagate themselves. Buds that form at the joints produce new shoots. Thus if a rhizome is cut by a cultivating tool it does not die, as would a root, but becomes several plants instead of one (The Columbia Electronic Encyclopedia)

These are indicators, as we shall see, of the plasticity of the brain, its capacity to modify its structure and performance in response to changing external circumstances. (Rose 92bc)

La pensée n'est pas arborescente, et le cerveau n'est pas une matière enracinée ni ramifiée. Ce qu'on appelle à tort «dendrites» n'assurent pas une connexion des neurones dans un tissu continu. (Mille plateaux 24b)

Thought is not arborescent, and the brain is not a rooted or ramified matter. What are wrongly called «dendrites» do not assure the connection of neurons in a continuous fabric. (A Thousand Plateaus 17b)

in large regions it is difficult to see neurons at all. Although some entire layers are almost filled with cell bodies and others with their twisting dendritic and axonal processes, embedded within them or closely adjacent to the neurons themselves lie quite different cells, smaller than the neuron, either unbranched or surrounded with an aura of short stubby processes like a sea urchin. (Rose 70bc)

These cells are known as GLIA (from the Latin for «glue») for they appear to stick and seal up all the available space in the cortex, outnumbering the neurons by about ten to one. (Rose 70c)

la consistance réunit concrètement les hétérogènes, les disparates, en tant que tels : elle assure la consolidation des ensembles flous, c'est-à-dire des multiplicités du type rhizome. (Mille plateaux 632d)

consistency concretely ties together heterogeneous, disparate elements as such: it assures the consolidation of fuzzy aggregates, in other words, multiplicities of the rhizome type. (A Thousand Plateaus 558)

La discontinuité des cellules, le rôle des axones, le fonctionnement des synapses, l'existence de micro-fentes synaptiques, le saut de chaque message par-dessus ces fentes, font du cerveau une multiplicité qui baigne, dans son plan de consistance ou dans sa glie, tout un système probabiliste incertain, uncertain nervous system. (Mille plateaux 24b)

The discontinuity between cells, the role of the axons, the functioning of the synapses, the existence of synaptic microfissures, the leap each message makes across these fissures, make the brain a multiplicity immersed in its plane of consistence or neuroglia, a whole uncertain, probabilistic system («the uncertain system»). (A Thousand Plateaus 17)

Beaucoup de gens ont un arbre planté dans la tête, mais le cerveau lui-même est une herbe beaucoup plus qu'un arbre. «L'axone et la dendrite s'enroulent l'un autour de l'autre comme le liseron autour de la ronce, avec une synapse à chaque épine.» (Mille plateaux 24bc)

Many people have a tree growing in their heads, but the brain itself is much more a grass than a tree. «‹The axon and dendrite twist around each other like bindweed around brambles, with synapses at each of the thorns› of the bramble – the dendritic spines.» (A Thousand Plateaus 17. D&G cite only the portion in single quotes; the remainder of the sentence, inside the double quotes, completes the original in Rose 90c.)

The point of major vulnerability of the nervous system to exogenous drugs or other agents is the cleft between the pre- and post-synaptic cells. The dendrites and the membrane of the cell body are concerned with computing the information arriving at them, in terms of transmitter, from all the synapses. Depending on the sum of this activity at any given time, the stimulus at the axon will climb above the threshold necessary for it to fire. (Rose 93b, emphasis mine)

The uncertain, probabilistic nature of the nervous system depends on this computing function of the dendrites and cell body. Information in the form of excitatory or inhibitory transmitter arrives at them as a result of three types of event: (a) firing in a nerve which synapses upon the dendrites or cell body in question in response to events external to it in the rest of the nervous system or in the environment; (b) the spontaneous firing of the pre-synaptic nerve in response to events internal to it; and (c) the quantal release of small amounts of transmitter without axonal impulses to trigger them. Of these three types of stimulus which the dendrites and cell body must compute, only the first is always certain and predictable at the hierarchical level of the system. The second may be determinate, but it may also occur as a result of events which, at the present level of our knowledge, appear random, indeterminate and probabilistic. The third appears, in so far as present knowledge goes, to be a random, probabilistic event. Thus it would seem that the synaptic structure of the nervous system is such as to make its functioning only partially predictable. Even if the mode of computation of the dendrites and cell body is itself deterministic, albeit complex, in terms of the 'weighting' given to particular synapses, they are none the less computing on the basis of information which appears to have a measure of indeterminacy built into it. It is this feature which accounts in part for the considerable spontaneous activity of the brain and which, as we shall see, is of importance when we come to consider the predictability of the responses of the brain. (93b-d)
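Rose's argument, that a deterministic computation fed partly random input yields only partially predictable output, can be sketched as a toy model. This is a hypothetical illustration, not Rose's: in each time window the cell deterministically sums (a) a fixed number of stimulus-driven EPSPs, (b) spontaneous presynaptic spikes and (c) spontaneously released quanta, with (b) and (c) assumed to follow Poisson distributions, and fires if the sum reaches threshold. Python's `random` module has no Poisson sampler, so Knuth's multiplication method is used; all potentials and rates are illustrative.

```python
import math
import random

# Illustrative values (assumptions, not taken from Rose)
THRESHOLD_MV = 15.0
DRIVEN_EPSP_MV = 4.0  # (a) EPSP per stimulus-driven spike, identical every trial
SPONT_EPSP_MV = 2.0   # (b) EPSP per spontaneous presynaptic spike
MINI_EPSP_MV = 0.5    # (c) miniature EPSP per spontaneously released quantum


def poisson(rng, lam):
    """Knuth's method: count uniform draws until their running product drops to e^-lam."""
    limit = math.exp(-lam)
    k, p = 0, rng.random()
    while p > limit:
        k += 1
        p *= rng.random()
    return k


def trial(rng, driven_spikes=3, spont_rate=2.0, mini_rate=5.0):
    """One time window: a fixed, deterministic rule applied to partly random input."""
    total = driven_spikes * DRIVEN_EPSP_MV              # (a) certain
    total += poisson(rng, spont_rate) * SPONT_EPSP_MV   # (b) may be random
    total += poisson(rng, mini_rate) * MINI_EPSP_MV     # (c) random
    return total >= THRESHOLD_MV


rng = random.Random(42)
outcomes = [trial(rng) for _ in range(1000)]
print(sum(outcomes), "of 1000 identical stimuli produced a spike")
```

The stimulus (a) is the same on every trial and the summing rule never changes, yet the outcome varies from trial to trial; with the random sources switched off (`spont_rate=0.0, mini_rate=0.0`) the response becomes perfectly predictable again.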

"You'd think this is crazy because engineers are always fighting to reduce the noise in their circuits, and yet here's the best computing machine in the universe—and it looks utterly random."

"'Oh, it's not noise,' [some claim] 'because noise implies something's wrong," says Pouget. "We started to realize then that what looked like noise may actually be the brain's way of running at optimal performance."

Our minds are experts at making probabilistic inferences [Bayesian computations].

"The cortex appears wired at its foundation to run Bayesian computations as efficiently as can be possible," says Pouget. His paper says the uncertainty of the real world is represented by this noise, and the noise itself is in a format that reduces the resources needed to compute it.

Imagine that we are being chased by a wild animal through the woods. We too have become like wild creatures, making spontaneous, instinctive and rapid decisions as to where to go and how to get there. Our minds are flooded with chaos. We are wildly absorbing inflowing sense-data. We come to a stream that we might not be able to leap over. How do we decide without taking extra time to perform mathematical inferences?

Pouget now prefers to call the noise "variability." Our neurons are responding to the light, sounds, and other sensory information from the world around us. But if we want to do something, such as jump over a stream, we need to extract data that is not inherently part of that information. We need to process all the variables we see, including how wide the stream appears, what the consequences of falling in might be, and how far we know we can jump. Each neuron responds to a particular variable and the brain will decide on a conclusion about the whole set of variables using Bayesian inference.
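The kind of Bayesian inference Pouget describes can be illustrated, in a much simplified form, with standard Gaussian cue combination. This sketch is not Pouget's probabilistic population-code model: it assumes each cue gives an independent, normally distributed estimate of the stream's width, combines them by weighting each estimate by its precision (1/variance), and jumps only if the probability that the stream is narrower than our longest jump exceeds a required confidence, which stands in for how bad falling in would be. All numbers and helper names are hypothetical.

```python
import math


def combine_gaussian(cues):
    """Posterior mean and standard deviation for independent Gaussian cues
    (flat prior): each estimate is weighted by its precision 1 / sd**2."""
    precisions = [1.0 / sd ** 2 for _, sd in cues]
    total = sum(precisions)
    mean = sum(p * m for p, (m, _) in zip(precisions, cues)) / total
    return mean, math.sqrt(1.0 / total)


def prob_below(x, mean, sd):
    """P(width < x) under a Gaussian belief, via the error function."""
    return 0.5 * (1.0 + math.erf((x - mean) / (sd * math.sqrt(2.0))))


def decide_to_jump(cues, max_jump_m, required_confidence):
    """Jump only if we are confident enough that the stream is narrower than
    our longest jump; higher stakes call for a higher required confidence."""
    mean, sd = combine_gaussian(cues)
    p_clear = prob_below(max_jump_m, mean, sd)
    return p_clear >= required_confidence, p_clear


# Two noisy glimpses of the stream's width in metres: (estimate, uncertainty)
cues = [(2.0, 0.5), (2.4, 0.25)]
print(combine_gaussian(cues))
print(decide_to_jump(cues, max_jump_m=2.8, required_confidence=0.95))
```

No exact measurement is ever taken: the combined belief (about 2.32 m, with less uncertainty than either glimpse alone) is rough, but it is enough to decide quickly.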

As you reach your decision, you'd have a lot of trouble articulating most of the variables your brain just processed for you. Similarly, intuition may be less a burst of insight than a rough consensus among your neurons.

Their papers explain that neurons do not respond consistently to the same information. But this is precisely what makes them so effective. Their irregularities allow our minds to make quick assessments with reasonable margins of error built in. It would take much too long to compute the precise dimensions of the stream. Instead, our minds are flooded with a rush of variant noisy information, and out of it we almost instantaneously come to rough approximations of the world around us.

We turn now to Catastrophe Theory, to guide our understanding of Deleuze's theory of thought and sensation.

Recall that Rose writes:

whether the axon fires or not depends on the sum of all the events arriving at the point at a given time. To these events, computed as a result of signals which may be arriving at far distant dendrites, the axon will respond by firing only if the depolarization climbs over the threshold. (Rose 90a, emphasis mine)

In Chaos & Complexity, Brian Kaye offers the example of the dripping faucet. The tap is not completely closed, so a tiny amount of water trickles through and gathers at the opening.

The shape of the drop depends upon the competition between the forces of gravity and the surface tension forces holding the drop to the tap. (Kaye 516-517, emphasis mine)

Suddenly it breaks away. The breaking away of the drop is the catastrophe which is the abrupt change arising as a sudden response to the cumulative effect of the smooth supply of small amounts of water added to the drop by the flow through the leaking valve in the tap. The size of the drop when it breaks away is a consequence of the interaction of several variables – environmental vibration, wind drafts, consistency of supply through the leaking valve, the roughness or smoothness of the edge of the tap, the purity of the liquid dripping from the tap and local heat changes. (517b)

Yet we cannot know all these factors. We can only find the average rate of fall, but we can never predict the next drop's time and size. An enormous number of small and highly-variable factors must coalesce to produce the unpredictable event, the catastrophe.

We see that from this perspective, unpredictability arises from a «deterministic chaos» that is so complex we can only deal with it statistically. The catastrophe Deleuze speaks of might not even result from extraordinary complexity. It might in fact result from pure chance itself, independent of any determinism whatsoever.
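A standard textbook example of «deterministic chaos» is the logistic map, which repeatedly applies x → r·x·(1 − x). It is not Kaye's faucet model, only an illustration of the same point: the rule is fully deterministic, yet two starting values that differ by one part in ten billion soon produce unrelated sequences, while long-run averages stay stable. The parameter r = 4 and the starting values are arbitrary choices for this sketch.

```python
def logistic_orbit(x0, r=4.0, steps=60):
    """Iterate the deterministic rule x -> r * x * (1 - x)."""
    xs = [x0]
    for _ in range(steps):
        xs.append(r * xs[-1] * (1.0 - xs[-1]))
    return xs


a = logistic_orbit(0.2)
b = logistic_orbit(0.2 + 1e-10)  # almost exactly the same starting condition

for step in (0, 10, 20, 30, 40, 50):
    print(step, round(a[step], 6), round(b[step], 6))

# Individual values become unpredictable, but averages are stable, much as the
# faucet's average drip rate can be measured while the next drop cannot be predicted.
long_a = logistic_orbit(0.2, steps=10_000)
long_b = logistic_orbit(0.3, steps=10_000)
print(sum(long_a) / len(long_a), sum(long_b) / len(long_b))
```

The two nearly identical orbits agree for the first couple of dozen steps and then part ways, because the tiny initial difference roughly doubles at each iteration; the long-run averages of both orbits nevertheless settle near 0.5.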

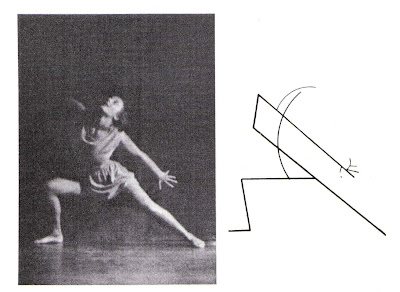

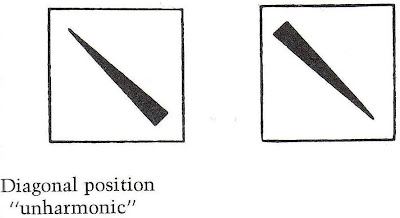

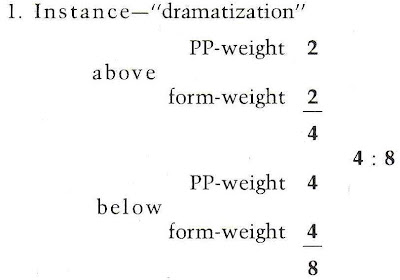

What we see is that the catastrophe in catastrophe theory is a formational process. For Deleuze, however, the catastrophe is a deformational process. He explains that digital aesthetic communication involves our using coded formalizations. For example, Kandinsky uses such binary coded formalities.

Analog, however, uses wild, bodily probabilistic movements. Consider for example Pollock's action art.

Deleuze writes that Bacon begins with the digital, then introduces the catastrophe or dice throw by making random analog body motions, like throwing paint or smearing it. This blends order and chaos.

[See this entry or this one for a more detailed explanation of analog and digital aesthetic communication.] If we merely processed nervous signals like digital computers, there would be no need for synapses, according to Rose. Our nervous systems are probabilistic computers that benefit from noise, disruptions, and chaos. Consider what happens when we receive a nervous signal from our senses, process it normally in our minds, and then react appropriately. This could be the same process the cow undergoes when seeing the grass, recognizing it, and eating it. Is this thinking? It's not for Deleuze.

Consider that there is a rhythm to our nerve impulses. There are digital pulses that are analogically modulated, for the discrete signal bursts vary continuously in their firing frequency. Now consider when you play music from some sort of tape medium, perhaps on film or cassette (or eight-track, reel-to-reel, etc.), or when you turn a record turntable faster or slower with your hand. The sounds and the music's rhythm will continuously stretch and deform to degrees determined by the speed of the changes. This is not possible in digital, by the way. If you feed the code slower, that does not stretch the sound out in a continuous way; playback must pause until the rest of the code arrives. But in analog there can be continuous variation in speeds. This is one way that we may communicate «intensities.»

First consider the «instantaneous velocity» of a discharged bullet. We may examine some point along its trajectory. And we may look at some non-extended moment of time during which it does not move an extent of distance. Nonetheless, in that instant, it has a tendency to move at a certain speed. But this tendency is different from moment to moment. The more unpredictable such changes in speed-tendencies are, the more intense the motion is, for Deleuze. But this makes more sense when we take into account sensation and thinking. So, for example, something causes us nervous stimulation, but in an irregular way. At times it speeds up, and at other times it slows down. Its continuous variation is irregular and unpredictable. It could be merely one stimulus, but it can nonetheless have internal heterogeneity. It can internally differ continuously with itself in respect to its speed changes. [See this entry for more illustration.]
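In standard calculus notation, the «instantaneous velocity» of the bullet at time t is the limit of average velocities taken over ever-shorter intervals, and the change of that speed-tendency from moment to moment is the acceleration. Here x(t) is assumed to denote the bullet's position along its trajectory; reading Deleuze's «intensity» as unpredictable variation in a(t) is this entry's interpretation, not something the formulas themselves express.

```latex
v(t) = \lim_{\Delta t \to 0} \frac{x(t + \Delta t) - x(t)}{\Delta t} = \frac{dx}{dt},
\qquad
a(t) = \frac{dv}{dt} = \frac{d^{2}x}{dt^{2}}
```

The velocity v(t) is defined at a single instant even though no distance is covered in that instant, which is the sense of «tendency» used above.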

As Rose has explained, so much of the brain's computation revolves around the speeds, that is, the firing frequencies, of the impulses. When our skin feels something at a certain pressure, there is a relatively consistent pulse pattern. Changes in the pattern tell us about changes in the cause. But if those speeds vary unpredictably, that will send the brain's whole computational system haywire. Certain neurons that would normally fire at a certain moment will not, because the stimulus slowed down. But other parts of the system are still expecting them to fire, because they were already set to expect the alteration that did not come. We also saw the complex looping networks. All this confusion spreads rapidly through the nervous circuitry. In other words, when we look at a Bacon painting, our brains are thrown into chaos. And because the nerve impulses continue to defy expectations, on account of their unpredictably varying speeds, our minds never fully compute what we sense. The impulses circulate wildly and madly through our brains, but also through all the other extensions of the nervous system, throughout our bodies. Hence the nausea or dizziness we might feel.

If all we do is see an object that affects us in a regular expected way, then we are neither having a real sensation nor are we thinking. But when the pulses traveling through our nervous system fall out of synchrony, then this is «the rhythm of sensation.» If there is no sensation of changing change, then there is no internal sensation of movement. For, there is no feeling of our sensations themselves having movement. And without movement, there is no rhythm. So only unpredictable dyssynchronous patterns are rhythmic, and only they cause us to sense and think.

Consider also that if all we sensed were things that always stayed the same, our brains would not need new connections to detect and recognize them. However, when there is intensity to the signal (unpredictable alterations of speed changes), the forces of the impulses might make new adjustments in the connections, structure, and functioning of the brain.

When the nervous impulses are traveling through our bodies in such a chaotic way, there is no knowing what «instructions» they will give to our muscles. Our body will cease to operate in its normal organic way. It will instead become a body without organs.

What kind of random?

Digital Inaccuracy vs. Analog Noise

To further our investigations, we will try to articulate the nature of the randomness involved in our neural chaos. There are different sorts of random, and various ways to produce it. Specifically, we would like to know if the random variations necessary for neurogenesis can be simulated mathematically. My hypothesis is that to simulate human consciousness artificially, we will need to use analog noise. Digital inaccuracies or random variations are limited to a finite number of possibilities. But analog noise can take on any of an infinity of possible values. So analog noise is infinitely rich in the ways it can steer our neurocomputations in new directions. And in fact, there is research that suggests analog noise is necessary for learning.

A.F. Murray compared digital inaccuracy with analog noise in neural networks charged with learning tasks.

Analogue techniques allow the essential neural functions of addition and multiplication to be mapped elegantly into small analogue circuits. The compactness introduced allows the use of massively parallel arrays of such operators, but analogue circuits are noise-prone. This is widely held to be tolerable during neural computation, but not during learning. In arriving at this conclusion, parallels are drawn between analogue 'noise' uncertainty, and digital inaccuracy, limited by bit length. This, coupled with dogma which holds that high (≃16 bit) accuracy is needed during neural learning, has discouraged attempts to develop analogue learning circuitry, although some recent work on hybrid analogue/digital systems suggests that learning is possible, if perhaps not optimal, in low (digital) precision networks. In this Letter results are presented which demonstrate that analogue noise, far from impeding neural learning, actually enhances it. (Murray 1546.1a, emphasis mine)

Learning is clearly enhanced by the presence of noise at a high level (around 20%) on both synaptic weights and activities. This result is surprising, in light of the normal assertion alluded to above that back propagation requires up to 16 bit precision during learning. The distinction is that digital inaccuracy, determined by the significance of the least significant bit (LSB), implies that the smallest possible weight change during learning is 1 LSB. Analogue inaccuracy is, however, fundamentally different, in being noise-limited. In principle, infinitesimally small weight changes can be made, and the inaccuracy takes the form of a spread of 'actual' values of that weight as noise enters the forward pass. The underlying 'accurate' weight does, however, maintain its accuracy as a time average, and the learning process is sufficiently slow to effectively 'see through' the noise in an analogue system. (1547.2.bc, emphasis mine)

The further implication is that drawing parallels in this context between digital inaccuracy and analogue noise is extremely misleading. The former imposes constraints (quantisation) on allowable weight values, whereas the latter merely smears a continuum of allowable weight values. (1547-1548, emphasis mine)
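Murray's distinction between quantised digital weights and noise-smeared analogue weights can be sketched numerically. This is a hypothetical illustration, not Murray's experiment: it assumes an 8-bit weight grid (so 1 LSB = 1/256), a repeated weight update of 0.001 (smaller than 1 LSB), and 20% multiplicative Gaussian noise on each analogue read, echoing the noise level Murray reports. The digital weight never moves because every update is rounded away, while the analogue weight accumulates the small changes and its noisy reads still average out to the underlying value.

```python
import random

LSB = 1.0 / 256   # hypothetical 8-bit weight resolution
STEP = 0.001      # each learning update, smaller than one LSB


def quantize(w):
    """Round a weight to the nearest value representable on the digital grid."""
    return round(w / LSB) * LSB


def apply_updates_digital(w0, n_updates):
    w = quantize(w0)
    for _ in range(n_updates):
        w = quantize(w + STEP)  # a sub-LSB change is rounded straight back
    return w


def apply_updates_analog(w0, n_updates):
    w = w0
    for _ in range(n_updates):
        w += STEP  # arbitrarily small changes are allowed
    return w


def noisy_reads(w, n, rng, noise=0.2):
    """Values an analogue weight presents on successive forward passes:
    smeared around w by multiplicative Gaussian noise (an assumed noise model)."""
    return [w * (1.0 + rng.gauss(0.0, noise)) for _ in range(n)]


w_digital = apply_updates_digital(0.0, 1000)
w_analog = apply_updates_analog(0.0, 1000)
reads = noisy_reads(w_analog, 100_000, random.Random(1))
print(w_digital, w_analog, sum(reads) / len(reads))
```

After a thousand updates the quantised weight is still 0, while the analogue weight has reached about 1.0; any single noisy read may be far off, but their time average "sees through" the noise, as Murray describes.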

Being "wrong" allows neurons to explore new possibilities for weights and connections. This enables us to learn and adapt to a chaotic changing environment. Using mathematical or digital means for simulating neural noise might be inadequate. Analog is better. For, it affords our neurons an infinite array of alternate configurations. In a later entry, we will discuss this further.
